Highlights

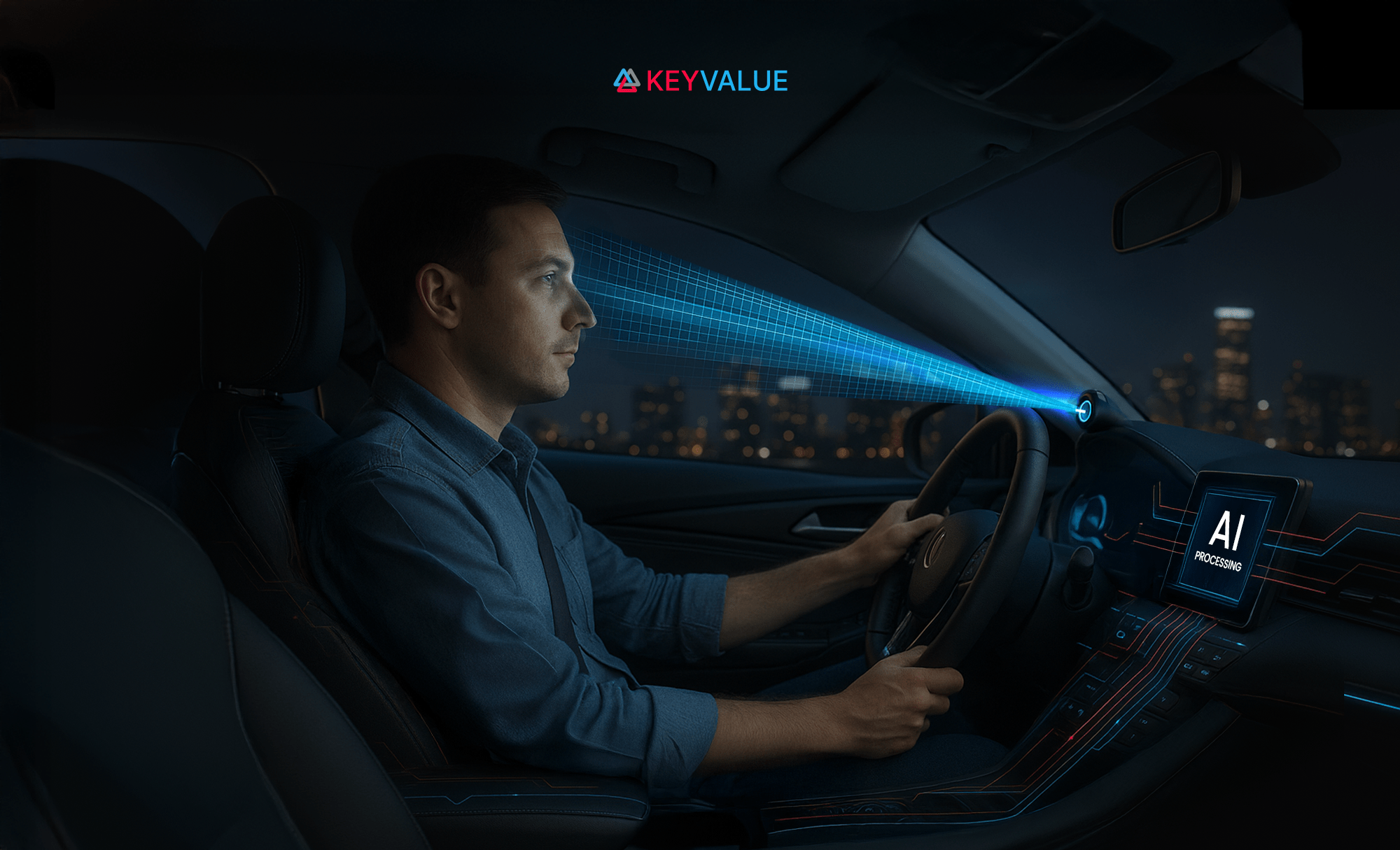

- AI-powered Driver Monitoring Systems (DMS) enhance road safety by detecting fatigue, distraction, and driver presence in real time without intrusive interaction.

- Dual-camera vision (door and steering cameras) combined with edge AI enables proactive comfort automation and safety alerts with millisecond response times.

- Computer vision models extract facial and posture landmarks to compute mathematical ratios, not identities, ensuring stringent privacy protection.

- All processing runs locally on an edge device, with inference accelerated by ONNX and TensorRT optimization, which removes cloud dependency and network latency.

- Actionable outputs integrate seamlessly with the vehicle ECU to trigger seat adjustments and driver alerts while preserving full data confidentiality.

A quiet companion on the road

Picture this: You walk toward your car after a long, exhausting day. As you reach the door, the seat quietly begins to slide backward, readying itself for your entry. Once you sit, it glides back into your preferred position. No buttons pressed, no adjustments made. It feels as if the car already knew you were coming.

Later, during your drive home, the road begins to blur. Your eyelids feel heavy, and for a moment you lose focus. Suddenly, a gentle chime rings out, followed by a message on the display: “Driver alertness low. Please stay attentive.” You blink, straighten up, and continue driving safely.

What just happened? You didn’t touch a thing. You didn’t ask for help. Yet something, or rather, someone invisible was watching out for you.

That silent guardian is AI, quietly working behind the scenes in the form of a driver monitoring system.

Why cars need invisible AI

Modern cars have become rolling computers. We see this in flashy infotainment screens, self-parking features, and lane-keeping assistance. But beneath all that, the most important task of technology is to protect the driver from themselves.

The U.S. National Highway Traffic Safety Administration (NHTSA) estimates that drowsy drivers were involved in about 91,000 police-reported crashes in 2017, leading to injuries and fatalities that could often have been avoided. Unlike mechanical issues that can be fixed with engineering, human fatigue is unpredictable. That’s where AI comes in, not as a replacement for human drivers, but as a guardian co-pilot that spots danger before it happens.

The beauty of driver monitoring systems is that they’re not in your face. They don’t demand your attention like apps or notifications. Instead, they quietly gather data, analyze it in real time, and intervene only when necessary. This “invisible AI” design philosophy makes them effective without being intrusive.

Two eyes, one brain

At the heart of the system are two small cameras.

- The door camera: Positioned near the car door, it recognizes when someone approaches. It doesn’t just spot a human; it estimates who is approaching and how far away they are. This enables the system to prepare adjustments even before you sit down.

- The steering camera: Mounted near the steering wheel, this one continuously watches the driver’s face and posture. It’s not looking for personal details like identity or age; it’s monitoring behaviors and patterns: are your eyes open, your head upright, your posture steady?
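The article does not say how the door camera measures distance. One common approach, shown here purely as an illustrative sketch, is the pinhole-camera relation: distance is roughly the focal length times the person’s real height, divided by the height of their bounding box in pixels. The function name, the 1.7 m assumed height, and the 1000 px focal length are all hypothetical values chosen for the example.

```python
def estimate_distance_m(bbox_height_px: float,
                        focal_length_px: float,
                        person_height_m: float = 1.7) -> float:
    """Pinhole-camera estimate: distance = focal_length * real_height / pixel_height.

    Assumes the whole person is visible in the frame and that their real
    height is close to person_height_m (an assumed average, not a measured value).
    """
    if bbox_height_px <= 0:
        raise ValueError("bounding-box height must be positive")
    return focal_length_px * person_height_m / bbox_height_px


# Hypothetical numbers: a 680 px tall detection seen through a 1000 px focal length.
print(round(estimate_distance_m(680, 1000), 2))  # 2.5
```

The estimate degrades when the person is partly out of frame or much taller or shorter than the assumed height, so a real system would likely combine it with other cues.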

Together, these cameras act like the “eyes” of the invisible AI. But eyes alone aren’t enough; you need a brain to make sense of the visuals. That’s where a powerful edge-computing device steps in.

The AI brain inside the car

Think of this edge AI platform as a powerful tiny supercomputer that sits quietly inside your car. It has one mission: process video feeds in real time and make instant decisions.

Why not just send data to the cloud? Because in cars, speed is everything. Imagine if your drowsiness alert took three seconds to process over the internet. By then, the risk might already have turned into an accident. With the edge computing device, all the heavy lifting happens inside the car itself, ensuring decisions are made in milliseconds.
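To put that delay in perspective, here is a small back-of-the-envelope calculation. The 3-second cloud delay comes from the example above; the 100 km/h speed and the 50 ms on-device latency are assumed figures for illustration, not measurements from the system.

```python
def distance_travelled_m(speed_kmh: float, latency_s: float) -> float:
    """Distance the car covers while the system is still deciding."""
    return speed_kmh / 3.6 * latency_s  # km/h -> m/s, then multiply by seconds


SPEED_KMH = 100.0  # assumed highway speed
scenarios = {
    "cloud round trip": 3.0,       # seconds, from the example in the text
    "on-device inference": 0.05,   # seconds, an assumed edge latency
}

for name, latency_s in scenarios.items():
    metres = distance_travelled_m(SPEED_KMH, latency_s)
    print(f"{name}: {metres:.1f} m travelled before the alert")
# cloud round trip: 83.3 m travelled before the alert
# on-device inference: 1.4 m travelled before the alert
```

Under these assumptions, the car covers most of a football pitch before a cloud-processed alert arrives, versus little more than a metre when the decision is made on the device.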

Edge intelligence like this is the unsung hero of modern vehicles. It’s like having a highly skilled assistant beside you who is fast, responsive, and completely focused, and who never leaves the car’s cabin.

How the AI understands you

Now comes the fascinating part.

How does the AI actually “understand” a human driver?

It doesn’t store your face or create a database of identities. Instead, it uses a combination of computer vision models and smart mathematical computations to interpret what it sees.

- Step 1: Detection models

The system uses cutting-edge real-time object detectors such as YOLO11 (often written YOLOv11). Think of these as security guards at a busy train station who can spot people in a crowd instantly. Their job is to recognize that a person is present and localize their exact position.

- Step 2: Landmark extraction

Once a person is detected, the AI extracts key facial and body landmarks: points such as the eyes, nose, mouth, shoulders, hips, and ankles. If you’ve ever seen dots on a motion capture suit in movies, this works the same way. The AI reduces you to a “digital skeleton” that it can analyze without needing your actual photo.

- Step 3: Ratios, not identities

Here’s where privacy and intelligence meet. Instead of saying “This is Alice”, the system thinks in terms of numbers such as shoulder-to-hip ratio, eye aspect ratio (how open or closed your eyes are), and head tilt angle. Together, 12 carefully engineered features of this kind form the vocabulary the AI uses to describe a driver.

- Step 4: Smart predictions and classification

These features are then fed into a machine learning model. We use XGBoost. Think of XGBoost as a referee making the call: is the driver present, approaching, or looking dangerously sleepy? The model then predicts the driver's current state, allowing the system to trigger appropriate safety alerts or actions.

- Step 5: Speed optimization

Finally, models are converted into formats like ONNX and accelerated using TensorRT. If AI is an orchestra, TensorRT is the conductor, ensuring every instrument plays at exactly the right speed. The result: the system can process video streams at real-time speed, without missing a beat.
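To make Step 3 concrete, the sketch below computes the three features named above from 2D landmark coordinates. It is an illustration under assumptions, not the system’s actual code: the eye aspect ratio uses the widely used six-point formulation, the landmark ordering and function names are hypothetical, and the sample coordinates are made up.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels


def dist(a: Point, b: Point) -> float:
    """Euclidean distance between two landmarks."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Six eye landmarks p1..p6: p1/p4 are the corners, p2/p3 the upper lid,
    p6/p5 the lower lid. A lower value means a more closed eye."""
    p1, p2, p3, p4, p5, p6 = eye
    return (dist(p2, p6) + dist(p3, p5)) / (2.0 * dist(p1, p4))


def head_tilt_deg(left_eye: Point, right_eye: Point) -> float:
    """Angle of the line joining the eye centres, relative to horizontal."""
    return math.degrees(math.atan2(right_eye[1] - left_eye[1],
                                   right_eye[0] - left_eye[0]))


def shoulder_to_hip_ratio(l_shoulder: Point, r_shoulder: Point,
                          l_hip: Point, r_hip: Point) -> float:
    """Shoulder width divided by hip width."""
    return dist(l_shoulder, r_shoulder) / dist(l_hip, r_hip)


# Made-up landmark coordinates for an open and a nearly closed eye.
open_eye = [(0, 0), (2, -1.5), (4, -1.5), (6, 0), (4, 1.5), (2, 1.5)]
closed_eye = [(0, 0), (2, -0.3), (4, -0.3), (6, 0), (4, 0.3), (2, 0.3)]

features = [
    eye_aspect_ratio(open_eye),
    head_tilt_deg((100, 200), (160, 200)),
    shoulder_to_hip_ratio((100, 300), (220, 300), (120, 500), (200, 500)),
]
print(features)  # three of the numeric features; no pixels are kept
```

A vector like `features` (extended to the full set of 12) is the kind of input the Step 4 classifier would receive. Note that nothing in it can be turned back into a picture of the driver.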

What happens with the output?

Once the AI has done its job, it produces simple, actionable outputs:

- Driver detected, 2.5 meters from the door.

- Driver seated, adjust seat to profile.

- Drowsiness detected, issue alert.

These outputs are sent to the car’s ECU (Electronic Control Unit), which acts as the car’s command center. The ECU then triggers the appropriate actions: moving the seat, displaying a warning, or playing an alert sound.

It’s worth noting that the AI system itself doesn’t control the hardware; it simply provides the intelligence. The integration with seat systems and alerts is handled by automotive engineers via the ECU.
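As a rough illustration of how small these outputs are, the sketch below models one as a compact message. The class names, field names, and JSON encoding are all hypothetical; the article does not describe the actual interface to the ECU, which in a real vehicle would follow the manufacturer’s own bus and message formats.

```python
import json
from dataclasses import dataclass, asdict
from enum import Enum
from typing import Optional


class DriverState(str, Enum):
    """Hypothetical state labels mirroring the example outputs above."""
    APPROACHING = "driver_approaching"
    SEATED = "driver_seated"
    DROWSY = "drowsiness_detected"


@dataclass(frozen=True)
class DmsEvent:
    """One message from the AI module to the ECU (illustrative only)."""
    state: DriverState
    confidence: float                   # classifier confidence, 0.0 to 1.0 (assumed field)
    distance_m: Optional[float] = None  # only meaningful while approaching

    def to_json(self) -> str:
        payload = asdict(self)
        payload["state"] = self.state.value  # send the plain string label
        return json.dumps(payload, sort_keys=True)


event = DmsEvent(DriverState.APPROACHING, confidence=0.93, distance_m=2.5)
print(event.to_json())
# {"confidence": 0.93, "distance_m": 2.5, "state": "driver_approaching"}
```

The message carries a state label and a couple of numbers, nothing more. What to do with it (move the seat, sound a chime) remains the ECU’s decision.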

The invisible part

Here’s what makes this AI so different: you don’t interact with it directly.

- There’s no app to open.

- There’s no button to press.

- There’s no complicated setup.

It just works silently, in the background. You experience it only as comfort (when your seat adjusts automatically) or as safety (when it alerts you at the right time).

In many ways, it’s the opposite of flashy tech—it’s invisible AI, designed to serve, not to show off.

The balancing act: Privacy vs safety

One common concern with in-car AI systems is privacy. “If a camera is watching me all the time, what happens to my data?”

The design here addresses this head-on:

- No images are uploaded to the cloud.

- Only mathematical features (ratios, distances, angles) are used.

- All computation happens locally on the in-car edge device (an NVIDIA Jetson AGX).

This ensures that the system enhances safety without becoming a privacy risk.

A final thought

The next time your car quietly adjusts your seat or nudges you awake during a late-night drive, pause for a moment. Behind the scenes, an orchestra of cameras, models, and algorithms is working in perfect harmony—so seamlessly that you barely notice it.

That’s the power of invisible AI. It’s not there to show off. It’s there to serve you—silently, reliably, and in real time.

FAQs

1. What is a Driver Monitoring System (DMS)?

A Driver Monitoring System is an in-cabin AI solution that tracks driver alertness, posture, and behavior using cameras and computer vision, providing real-time safety alerts and comfort automation.

2. How does the system detect drowsiness or distraction?

The AI feeds features derived from facial and body landmarks, such as eye aspect ratio, head tilt, and posture, into a machine learning model that classifies the driver’s state and flags early signs of fatigue or inattention.

3. Does the system capture or store personal images?

No. The system converts video into mathematical representations such as distances and angles. It does not store faces or transmit images to the cloud, ensuring privacy compliance.

4. Why is edge AI used instead of cloud processing?

Safety decisions must occur instantly. Edge processing eliminates connectivity dependency and supports millisecond-level inference, which is critical to prevent accidents.

5. What happens when the AI detects risk?

The model outputs actionable signals, such as “drowsiness detected” or “driver approaching”, which are sent to the car’s ECU to trigger alerts or automated adjustments.

6. Can the system control the car?

No. The AI only provides intelligence and state classification. Hardware control is executed exclusively through the vehicle’s Electronic Control Unit.

7. What makes this approach different from traditional assistance tech?

DMS operates silently in the background without requiring driver interaction. It focuses on preventative safety and personalized comfort through invisible, real-time intelligence.