Highlights

- Siren redefines chatbots by moving beyond simple conversations to orchestrate complex, multi-step campaign management workflows using LangGraph.

- Multi-intent routing and a three-layer memory architecture enable Siren to process parallel intents, retain long-term context, and sustain a natural, human-like conversation flow.

- Funnel-Sync orchestration and a modular node architecture keep execution consistent, reliable, and free of race conditions, even across 50+ specialized nodes.

- The result: a scalable, deterministic AI system that manages entire campaign lifecycles with the reliability of traditional software and the flexibility of natural language.

The problem: Why traditional chatbots fail at campaign orchestration

Most chatbots today follow a predictable pattern. They answer FAQs, summarize documents, maybe book a meeting if you've connected the right APIs. But what happens when users expect more? What happens when they say:

"Create a summer sale campaign, use email and SMS channels, target users in California and New York, schedule it for every Monday at 10 AM, and oh, also set up a test run for tomorrow."

Traditional chatbot architectures collapse under this weight. They process intents sequentially, lose context between steps, and force users into frustrating command-by-command interactions. After eight years of building notification systems for more than 50 products, we knew there had to be a better way.

That's why we built Siren — not just another AI chatbot, but a complete reimagining of how conversational AI can orchestrate complex, multi-step campaign management workflows.

The journey: From simple chatbot to AI-powered campaign orchestration platform

Starting point: Understanding the real challenge

When we began developing Siren, we identified three fundamental limitations in existing chatbot architectures:

Sequential processing trap: Traditional bots handle one intent at a time. Users, however, think in parallel. They don't want to issue five separate commands to set up a campaign — they want to describe their goal and have the system figure out the execution path.

Context amnesia: Most chatbots suffer from severe memory limitations. They might remember the last few messages, but ask about a campaign you configured last week? Good luck. This forced users to repeat information constantly, destroying the illusion of intelligent conversation.

Orchestration chaos: Even when bots could parse multiple intents, executing them reliably was another story. Race conditions, state conflicts, and unpredictable execution orders made complex workflows feel like rolling dice.

The architecture: How we built the AI campaign orchestration engine

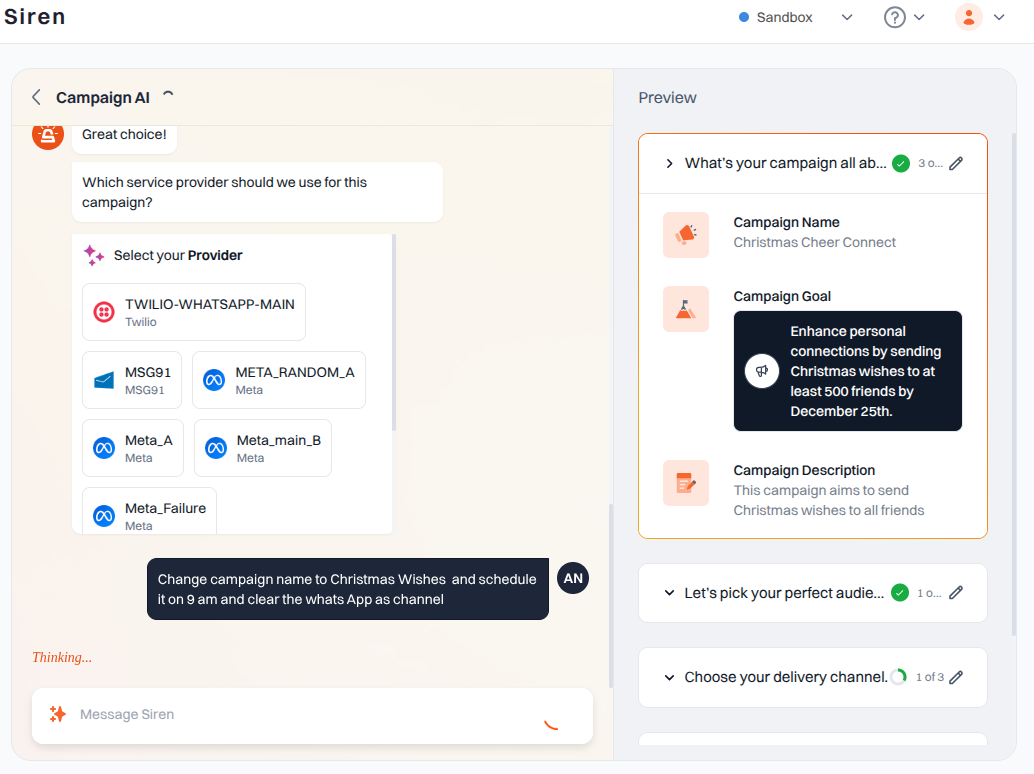

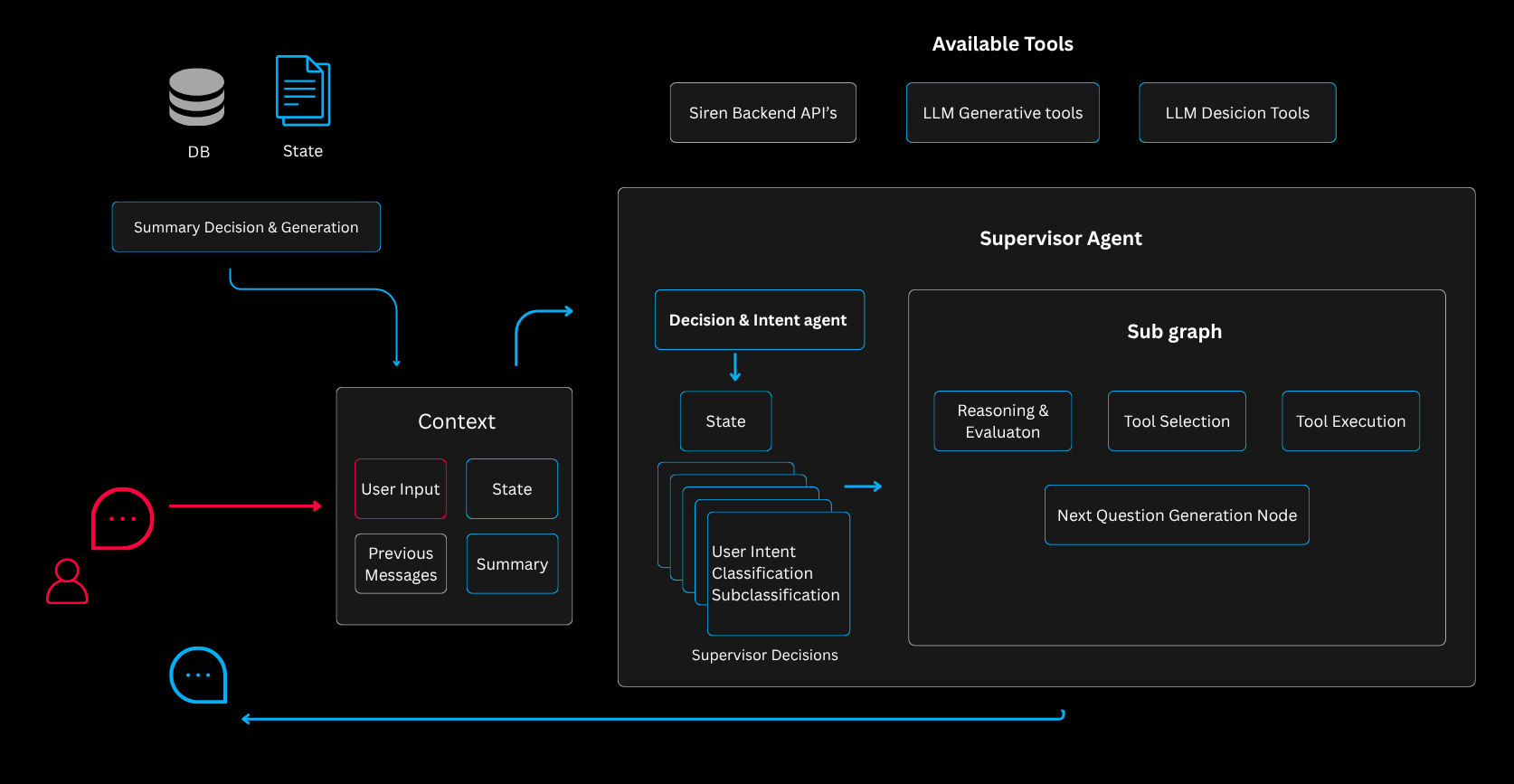

Multi-intent routing with LangGraph

The breakthrough came when we realized we needed to fundamentally rethink how user input flows through the system. Instead of sequential processing, we built a supervisor-based routing system that understands the full scope of user intent before taking action.

Here's how it works in practice. When a user sends a multi-part request, our supervisor agent immediately decomposes it into discrete decisions that can execute in parallel. A representative set of decisions looks like this:

[
    SupervisorDecision(
        classification="campaign_trigger_details",
        user_intent="add",
        campaign_sub_classification="start_time",
        value_provided=True
    ),
    SupervisorDecision(
        classification="campaign_details",
        user_intent="edit",
        campaign_sub_classification="campaign_name",
        value_provided=True
    ),
    SupervisorDecision(
        classification="campaign_channel",
        user_intent="clear",
        campaign_sub_classification="channel_selection",
        value_provided=False
    )
]

Each decision routes to its own specialized subgraph, and those subgraphs execute in parallel. This isn't just about speed; it's about maintaining context and allowing natural, human-like interaction patterns. The key piece is our routing logic, which dynamically maps intents to execution nodes.
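To make the routing step concrete, here is a minimal sketch of how decisions like the ones above could be fanned out to subgraph entry nodes. The `SupervisorDecision` dataclass mirrors the field names in the example, but its types, the `ROUTES` table, the edit/clear node names, `FALLBACK_NODE`, and `branches_for` are hypothetical; only `schedule_main_add` appears in Siren's own routing code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SupervisorDecision:
    """Hypothetical stand-in for Siren's decision object (field names from the example)."""
    classification: str
    user_intent: str  # "add", "edit", or "clear" in the example
    campaign_sub_classification: str
    value_provided: bool


# Hypothetical routing table: (classification, intent) -> subgraph entry node.
ROUTES: dict[tuple[str, str], str] = {
    ("campaign_trigger_details", "add"): "schedule_main_add",
    ("campaign_details", "edit"): "campaign_details_main_edit",
    ("campaign_channel", "clear"): "channel_main_clear",
}
FALLBACK_NODE = "off_topic_node"  # hypothetical catch-all for unrecognized pairs


def branches_for(decisions: list[SupervisorDecision]) -> list[str]:
    """Return the entry node for each decision, in order, without duplicates.

    All returned nodes are intended to run in the same parallel step.
    """
    nodes = [ROUTES.get((d.classification, d.user_intent), FALLBACK_NODE) for d in decisions]
    return list(dict.fromkeys(nodes))


decisions = [
    SupervisorDecision("campaign_trigger_details", "add", "start_time", True),
    SupervisorDecision("campaign_details", "edit", "campaign_name", True),
    SupervisorDecision("campaign_channel", "clear", "channel_selection", False),
]
print(branches_for(decisions))
# ['schedule_main_add', 'campaign_details_main_edit', 'channel_main_clear']
```

In a LangGraph graph, a conditional-edge function can return a list of node names like this to fan out. Keeping the dispatch in a plain lookup table means the LLM only chooses labels, while the code decides which node runs.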

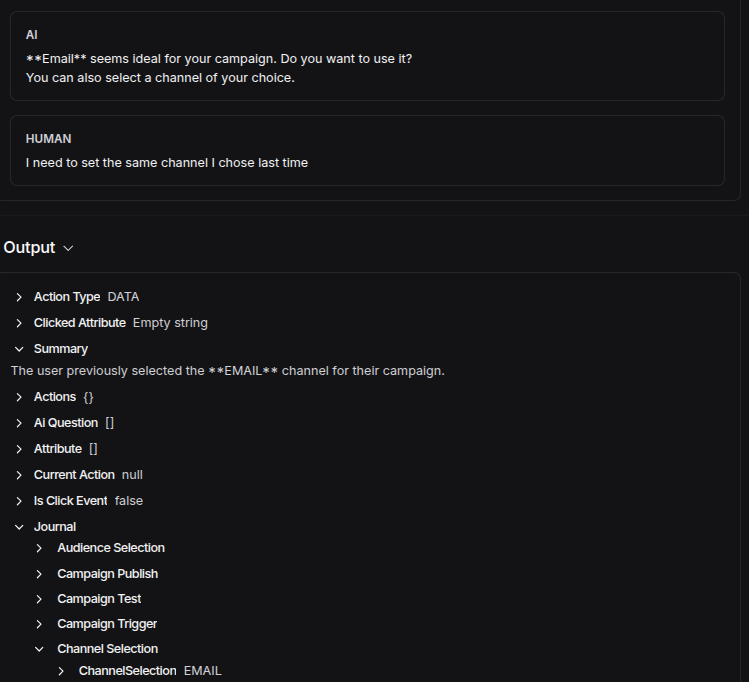

The memory architecture: Three layers of context

One of our most significant innovations was recognizing that memory isn't monolithic — different types of information have different lifespans and access patterns. We developed a three-tier memory architecture that mirrors how humans actually think about ongoing tasks.

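from typing import Annotated, List, TypedDict

from langchain_core.messages import BaseMessage
from langgraph.graph.message import add_messages

# Assumed to be defined elsewhere in the codebase: the custom reducers
# `replace` and `merge_dict`, and the section types (CampaignDetails,
# AudienceSelection, ChannelSelection, ...).

class CampaignJournal(TypedDict):
    details: CampaignDetails
    audience_selection: AudienceSelection
    channel_selection: ChannelSelection
    message_template: MessageTemplate
    campaign_test: CampaignTesting
    campaign_trigger: CampaignTrigger
    campaign_publish: CampaignPublish

class CampaignMainAgentState(TypedDict):
    # Fields reduced with `replace`: each update overwrites the previous value
    ai_question: Annotated[List[BaseMessage], replace]
    attribute: Annotated[List[str], replace]
    options: Annotated[List[List[str]], replace]
    is_click_event: Annotated[bool, replace]
    # Layers for context
    messages: Annotated[List[BaseMessage], add_messages]  # immediate memory
    journal: Annotated[CampaignJournal, merge_dict]  # persistent journal
    summary: Annotated[str, replace]  # compressed summary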

Immediate memory (messages): This captures the current conversation flow. It's ephemeral, focusing on the immediate back-and-forth between user and system. When you say "same time as yesterday," the system knows exactly what you mean.

Persistent journal: This is where campaign truth lives. Every decision, every configuration, every test result gets stored here in a structured format. Users can leave mid-configuration and return days later to find everything exactly as they left it.

Compressed summary: For long-running campaigns or users with extensive history, we compress older interactions into semantic summaries. This keeps token counts manageable while preserving essential context.
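The three layers differ mainly in how an update is folded into the stored value. LangGraph's `add_messages` reducer appends incoming messages (replacing any that share an ID); the sketch below shows one plausible reading of the custom `replace` and `merge_dict` reducers, plus a summary-compression step. The reducer bodies, the deep-merge behavior, `compress`, and `keep_last` are assumptions, and the string join stands in for the LLM summarization call a real system would make.

```python
from typing import Any


def replace(old: Any, new: Any) -> Any:
    """Assumed `replace` reducer: the newest value wins."""
    return new


def merge_dict(old: dict, new: dict) -> dict:
    """Assumed `merge_dict` reducer: recursively merge a partial journal update."""
    merged = dict(old or {})
    for key, value in new.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_dict(merged[key], value)
        else:
            merged[key] = value
    return merged


def compress(messages: list[str], summary: str, keep_last: int = 4) -> tuple[list[str], str]:
    """Fold all but the newest `keep_last` messages into the running summary."""
    split = max(len(messages) - keep_last, 0)
    older, recent = messages[:split], messages[split:]
    if not older:
        return messages, summary
    # Placeholder for an LLM summarization call.
    new_summary = " | ".join(([summary] if summary else []) + older)
    return recent, new_summary


journal = {"details": {"name": "Summer Sale"}, "channel_selection": {"channels": ["email"]}}
journal = merge_dict(journal, {"details": {"description": "20% off"},
                               "channel_selection": {"channels": ["email", "sms"]}})
print(journal["details"])  # {'name': 'Summer Sale', 'description': '20% off'}

msgs, summary = compress(["m1", "m2", "m3", "m4", "m5", "m6"], summary="", keep_last=4)
print(msgs, summary)  # ['m3', 'm4', 'm5', 'm6'] m1 | m2
```

With a shallow merge (`{**old, **new}`), the second journal update would have wiped `details["name"]`. Whichever behavior Siren actually uses, the point is that the journal accepts partial updates, so earlier fields survive when a user returns days later.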

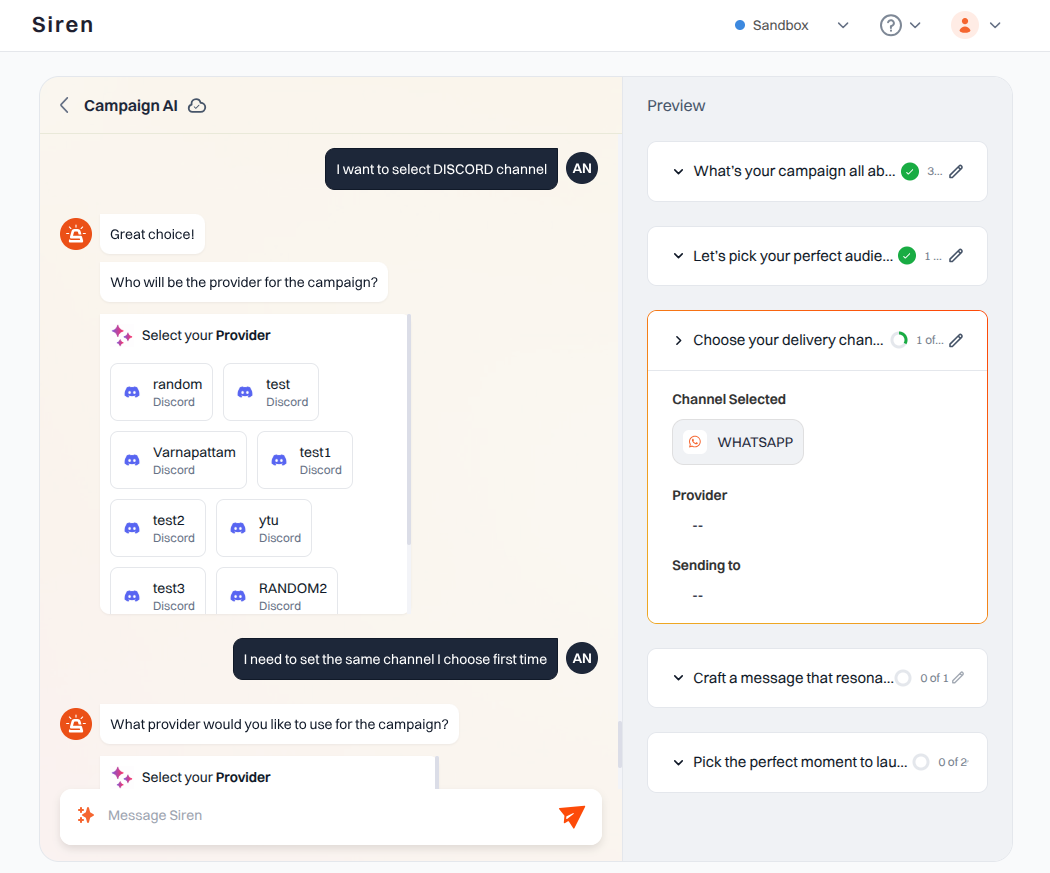

The parallel universe: Decision tree orchestration in campaign management

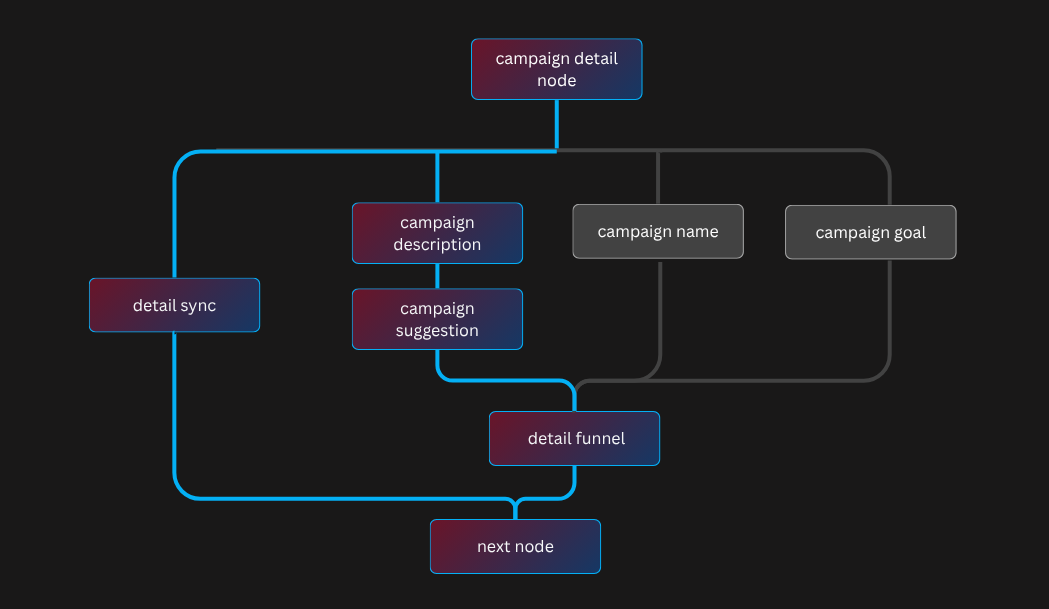

As Siren grew, we faced a classic software engineering challenge: how do you add features without creating a maintenance nightmare? Our solution was what we call the "parallel universe" architecture — a modular system of specialized nodes that can be combined dynamically.

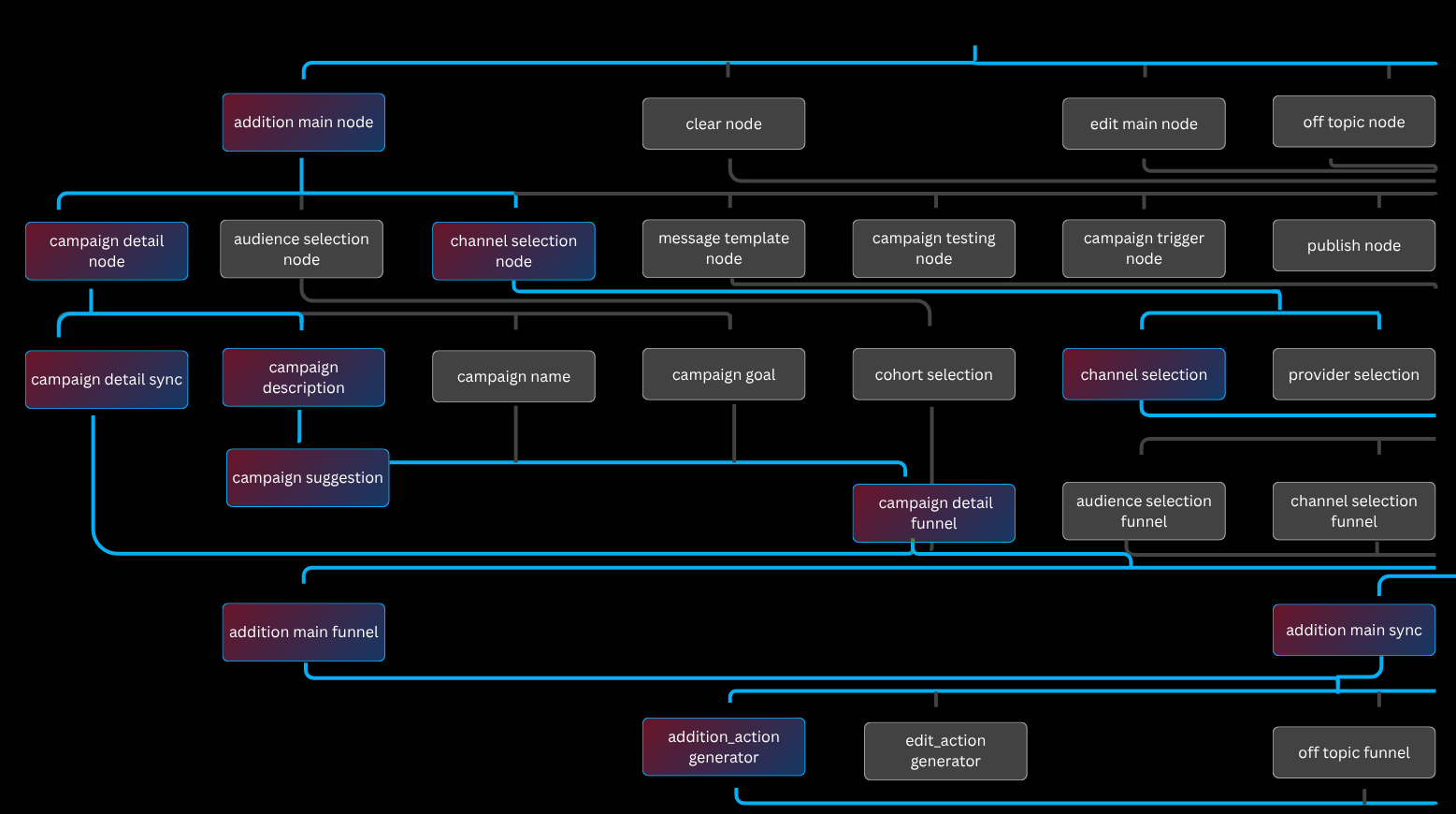

Currently, Siren orchestrates over 50 specialized nodes, each handling a specific aspect of campaign management:

- Campaign configuration nodes: name, description, goals, templates

- Channel management nodes: email, SMS, WhatsApp, Slack, and their providers

- Audience nodes: cohort selection, filtering, segmentation rules

- Scheduling nodes: triggers, recurring schedules, time zones

- Testing & publishing nodes: test sends, approval workflows, publication

The beauty of this approach is its composability. New features simply mean new nodes. Want to add Discord support? Create a new channel node. Need A/B testing? Add test variant nodes. The core orchestration logic remains untouched.

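from typing import Optional

# Assumed to be defined elsewhere in the codebase: ClassificationKeys, CampaignAgentState.


def generate_node_list(
    state_values, mapping, default_node, sync_node: Optional[str] = None
) -> list[str]:
    # Map each classification to its node; unknown values fall back to default_node.
    # dict.fromkeys removes duplicates (e.g. two unmapped values) while keeping order.
    nodes = list(dict.fromkeys(mapping.get(val, default_node) for val in state_values))
    if not nodes:
        # Nothing classified: still route to the default node, not only to the sync node.
        nodes = [default_node]
    if sync_node:
        nodes.append(sync_node)
    return nodes


async def campaign_addition_intention_router(state: CampaignAgentState):
    # To add a new feature or node, simply create the node & edges and append it to the mapping.
    classification_map = {
        ClassificationKeys.CAMPAIGN_DETAILS: "campaign_details_main_add",
        ClassificationKeys.CAMPAIGN_COHORTS: "cohort_main_add",
        ClassificationKeys.CAMPAIGN_CHANNEL: "channel_main_add",
        ClassificationKeys.CAMPAIGN_TRIGGER_DETAILS: "schedule_main_add",
    }
    return generate_node_list(
        state["classification"], classification_map, "off_topic_node_add", "add_sync"
    )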

Funnel-sync parallelism: Preventing the race condition nightmare

Parallel execution sounds great until you realize that some operations complete in milliseconds while others take seconds. Without careful orchestration, you end up with race conditions that corrupt state or produce inconsistent results.

We solved this with a pattern we call Funnel-Sync Orchestration:

The Funnel Node acts as a collection point, gathering results from all parallel branches. Only when every branch has completed does execution proceed to the Sync Node, which reconciles the branches' updates into a single, consistent state before moving forward. This guarantees consistency without sacrificing the performance benefits of parallelism.
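The Funnel-Sync pattern can be sketched in plain `asyncio`, independent of LangGraph. `asyncio.gather` plays the funnel, since it returns only after every branch has finished and yields results in submission order rather than completion order; the merge loop plays the sync node. The function and branch names are hypothetical, and raising an error when two branches write the same key is one possible conflict policy, not necessarily Siren's.

```python
import asyncio
from typing import Awaitable, Callable

Branch = Callable[[dict], Awaitable[dict]]


async def funnel_sync(state: dict, branches: dict[str, Branch]) -> dict:
    """Run branches concurrently, wait for all of them, then merge deterministically."""
    names = list(branches)
    # Funnel: gather() resolves only when every branch is done, and its results
    # follow the order of `names`, regardless of which branch finished first.
    results = await asyncio.gather(*(branches[name](dict(state)) for name in names))

    # Sync: fold each branch's partial update into one state, in a fixed order,
    # refusing silent overwrites between branches.
    merged = dict(state)
    written_by: dict[str, str] = {}
    for name, update in zip(names, results):
        for key, value in update.items():
            if key in written_by:
                raise ValueError(f"{name!r} and {written_by[key]!r} both wrote {key!r}")
            written_by[key] = name
            merged[key] = value
    return merged


async def channel_branch(state: dict) -> dict:
    await asyncio.sleep(0.05)  # simulated slow branch (e.g. a provider lookup)
    return {"channels": ["email", "sms"]}


async def schedule_branch(state: dict) -> dict:
    return {"schedule": "every Monday 10:00"}  # fast branch


result = asyncio.run(funnel_sync({"name": "Summer Sale"},
                                 {"channel": channel_branch, "schedule": schedule_branch}))
print(result)
# {'name': 'Summer Sale', 'channels': ['email', 'sms'], 'schedule': 'every Monday 10:00'}
```

The fast branch finishes first, yet the merged state is identical on every run. LangGraph offers a comparable barrier natively: passing a list of source nodes to `StateGraph.add_edge` makes the target node wait until all of them have finished.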

Results: How Siren powers reliable campaign orchestration

The impact of this architecture goes beyond technical elegance. For our users, it means:

Natural conversation flow: Users describe what they want in their own words, using as many or as few commands as feel natural. The system adapts to them, not the other way around.

Reliable multi-step workflows: Complex campaign configurations that previously required careful step-by-step execution now happen automatically, with consistent results every time.

True persistence: Start a campaign on Monday, refine it on Wednesday, test on Friday, and publish next week. Every decision, every configuration is remembered and ready.

Lessons: From building a LangGraph-powered campaign management chatbot

Building Siren taught us several crucial lessons about LLM-powered orchestration:

Determinism matters more than you think: While it's tempting to let LLMs freely choose tools and paths, deterministic orchestration provides the reliability users actually need. Creativity in understanding; precision in execution.

Memory is multi-dimensional: Don't treat context as a single buffer. Different information has different lifespans, access patterns, and importance levels. Design your memory architecture accordingly.

Parallelism requires choreography: Simply running things in parallel isn't enough. You need explicit coordination patterns to prevent race conditions and ensure consistency.
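The first lesson, creativity in understanding and precision in execution, can be enforced at the boundary between model and code. In this sketch the LLM's raw JSON is accepted only if every label belongs to a closed vocabulary; anything else is dropped before it can reach a node. The `Intent` and `Classification` enums, `parse_decisions`, and the JSON shape are hypothetical stand-ins; structured-output features or a schema library such as Pydantic could play the same role.

```python
import json
from enum import Enum


class Intent(str, Enum):
    ADD = "add"
    EDIT = "edit"
    CLEAR = "clear"


class Classification(str, Enum):
    CAMPAIGN_DETAILS = "campaign_details"
    CAMPAIGN_CHANNEL = "campaign_channel"
    CAMPAIGN_TRIGGER_DETAILS = "campaign_trigger_details"


def parse_decisions(raw: str) -> list[tuple[Classification, Intent]]:
    """Keep only (classification, intent) pairs drawn from the allowed vocabulary."""
    try:
        items = json.loads(raw)
    except json.JSONDecodeError:
        return []  # unparseable output: route nowhere rather than guess
    if not isinstance(items, list):
        return []
    decisions = []
    for item in items:
        try:
            decisions.append((Classification(item["classification"]), Intent(item["user_intent"])))
        except (KeyError, TypeError, ValueError):
            continue  # unknown label or malformed entry never reaches a node
    return decisions


raw = ('[{"classification": "campaign_channel", "user_intent": "add"},'
       ' {"classification": "launch_rocket", "user_intent": "add"}]')
print(parse_decisions(raw))  # only the campaign_channel / add pair survives
```

Dropping unknown labels is one policy; routing them to an off-topic node, as `campaign_addition_intention_router` does with its default node, is another. Either way, the set of reachable nodes is fixed in code rather than chosen freely by the model.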

Conclusion: The future of AI-powered workflow orchestration

The future of conversational AI isn't about better small talk; it's about building systems that can understand complex intentions and orchestrate sophisticated workflows reliably. By combining LangGraph, deterministic orchestration, and a carefully designed architecture, we've shown that chatbots can evolve into true AI-powered automation platforms.

Siren isn't just handling conversations; it's orchestrating entire campaign lifecycles with the reliability of traditional systems and the flexibility of natural language. That's the real promise of next-generation AI agents.

AI is evolving. So are we. At KeyValue's AI lab, we’re shaping the next frontier of artificial intelligence. Let’s build the future together.

FAQs

- What is a campaign management system?

A campaign management system is a platform that automates the planning, coordination, and execution of multi-step marketing campaigns across different channels. It integrates tools for audience targeting, scheduling, testing, and performance tracking, ensuring campaigns run efficiently, consistently, and with minimal manual intervention.

- What is LangGraph used for?

LangGraph is used to design and manage complex, multi-step workflows for AI systems. It enables developers to build structured, deterministic orchestration between different agents, tools, and tasks. LangGraph allows parallel intent handling, context retention, and controlled execution, making it ideal for applications like AI assistants, chatbots, and automation platforms that require reliability, coordination, and scalability.

- What makes the Siren AI campaign chatbot different from traditional chatbots?

Siren goes beyond basic conversations to orchestrate complex, multi-step campaign management workflows using LangGraph, enabling reliable, context-aware, and automated execution.

- How does LangGraph improve AI-driven campaign management?

LangGraph powers Siren’s multi-intent routing and parallel orchestration, allowing the system to process multiple user intents simultaneously while maintaining context and workflow reliability.

- Why is deterministic orchestration important in AI systems?

Deterministic orchestration ensures AI actions follow a predictable, controlled flow, preventing race conditions and ensuring consistent, reliable outcomes in complex campaign workflows.