Highlights

- Model Context Protocol (MCP) is Anthropic’s open-source standard that lets AI models securely access tools and live data in real time.

- Instead of static LLMs that rely only on training data, MCP enables dynamic, context-aware AI systems that can retrieve information and act instantly.

- Developers can now build MCP servers and clients that speak the same protocol – powering smarter, more adaptive AI applications.

As Large Language Models (LLMs) become more deeply embedded in business workflows, one persistent limitation remains: their inability to access real-time, external context. Relying solely on training data leaves models outdated, often resulting in incomplete or inaccurate outputs.

That’s where the Model Context Protocol (MCP) steps in.

Developed by Anthropic, MCP is an open-source protocol designed to standardize how LLMs interact with tools and external data: securely, consistently, and in real time. Whether you’re trying to build an MCP server for your app or exploring MCP use cases for enterprise AI, this protocol aims to change how developers and organizations integrate intelligence into their products.

Why does the Model Context Protocol (MCP) matter for AI developers?

Modern AI applications need more than smarts—they need situational awareness. From live dashboards to dynamic chatbots, the demand for real-time AI is everywhere.

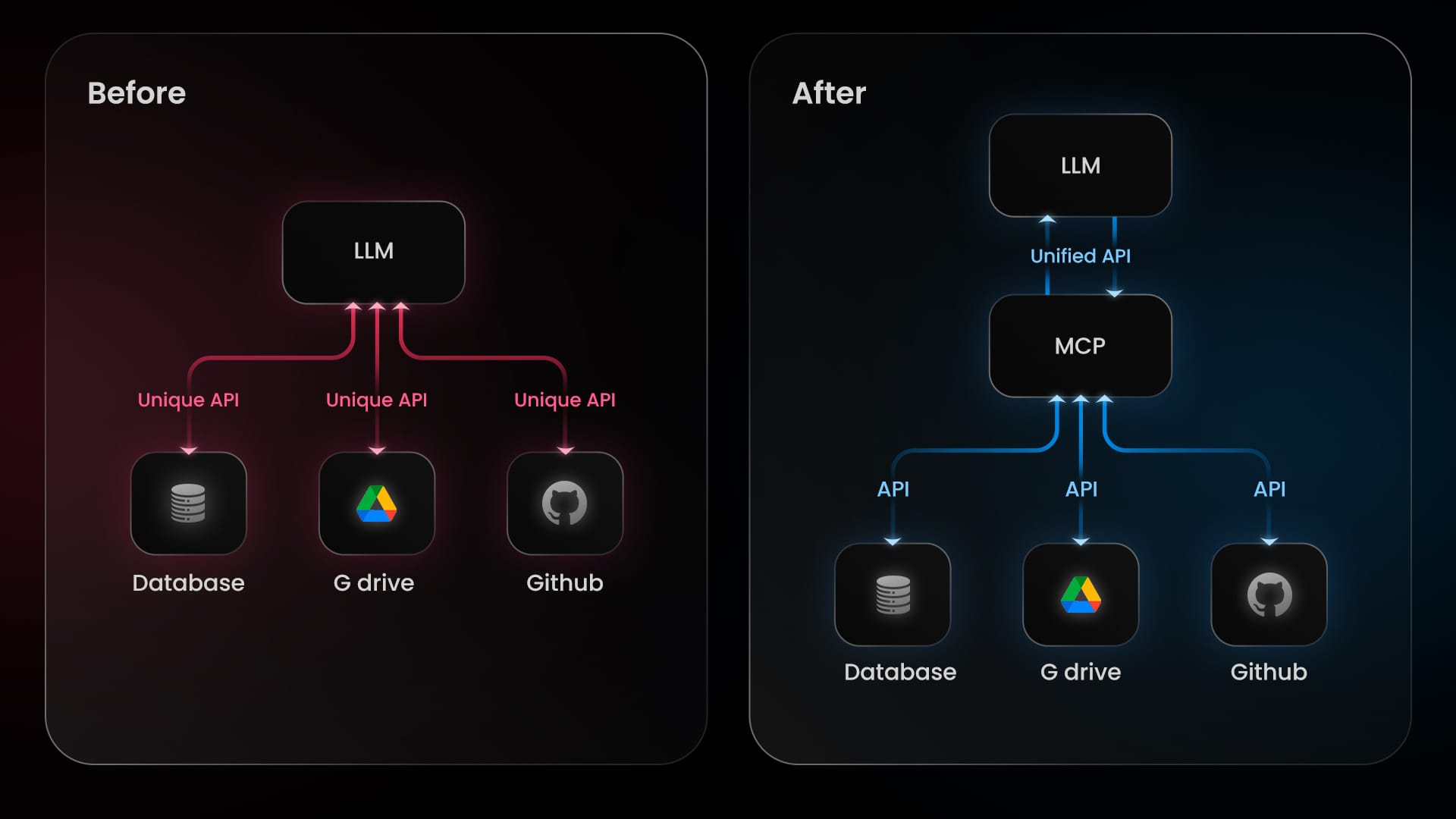

Before MCP, developers had to create custom connectors for every new tool or data source. It was repetitive, brittle, and hard to scale. MCP eliminates that friction by providing a unified interface for tool discovery, context retrieval, and action execution.

The result? AI systems that are no longer static responders but adaptive agents—capable of evaluating and using external resources on the fly.

MCP vs Traditional APIs

| Aspect | Model Context Protocol (MCP) | Traditional API |
|---|---|---|
| Definition | Protocol where tools and context are embedded into model interaction | Method for exchanging data between systems |
| Setup | One universal standard | Custom setup for each system |
| Flexibility | Dynamic, real-time, and adaptable | Rigid and specific |
| Example Use Cases | AI tool execution, contextual data retrieval, live actions | Web services, data sharing |
| Tool Discovery | Automatic | Manual |

Before and after MCP

How does the Model Context Protocol (MCP) work in real-world AI systems?

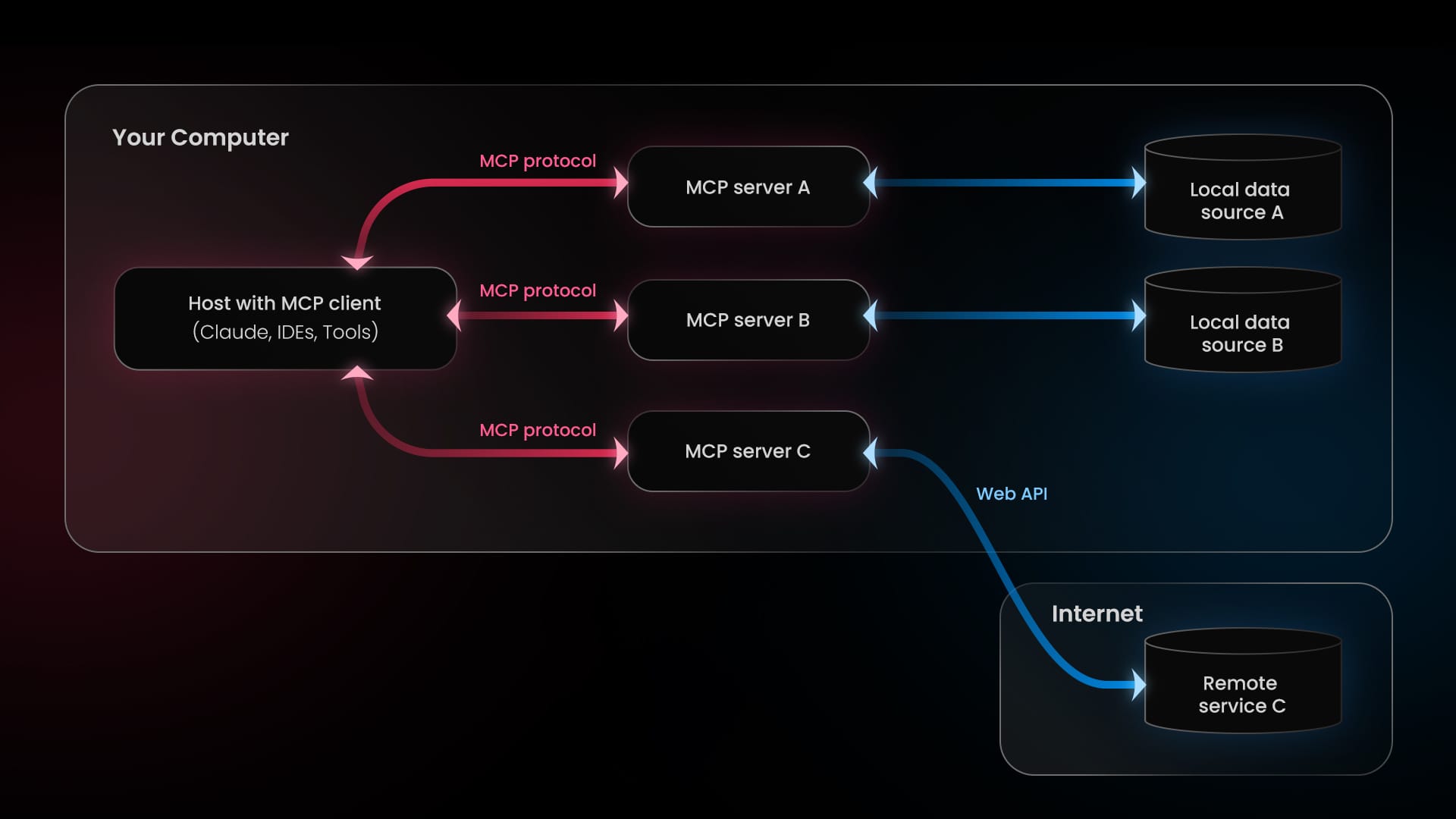

Think of MCP as a client-server architecture for intelligent systems. The host, often a tool like Claude or an AI-powered app, initiates the connection through an MCP client. The MCP server provides access to external data, tools, and context.

By following the MCP specification, developers can reliably build MCP clients and servers that speak the same language, resulting in consistent interactions between models and external systems.

This setup ensures your AI not only "knows" things but also does things.

What is the architecture of the Model Context Protocol (MCP)?

At its core, MCP follows a client-server architecture where a host application can connect to multiple servers:

- MCP hosts: Programs like Claude Desktop, IDEs, or AI tools that want to access data through MCP

- MCP clients: Protocol clients that maintain 1:1 connections with servers

- MCP servers: Lightweight programs that each expose specific capabilities through the standardized Model Context Protocol
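
The host/client/server split above can be pictured with a small plain-Python model. This is only an illustrative sketch, not the MCP SDK: the classes `ToyServer`, `ToyClient`, and `ToyHost` and their methods are hypothetical, and real clients reach servers over a transport rather than through direct method calls.

```python
from dataclasses import dataclass, field


@dataclass
class ToyServer:
    """Hypothetical stand-in for an MCP server exposing named tools."""
    name: str
    tools: dict = field(default_factory=dict)  # tool name -> callable

    def list_tools(self) -> list[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, **arguments):
        return self.tools[tool](**arguments)


@dataclass
class ToyClient:
    """Holds exactly one server connection (the 1:1 relationship)."""
    server: ToyServer


@dataclass
class ToyHost:
    """The application; it owns one client per connected server."""
    clients: list = field(default_factory=list)

    def connect(self, server: ToyServer) -> ToyClient:
        client = ToyClient(server)
        self.clients.append(client)
        return client

    def discover(self) -> dict:
        # Aggregate the tools advertised by every connected server.
        return {c.server.name: c.server.list_tools() for c in self.clients}


host = ToyHost()
host.connect(ToyServer("weather", {"forecast": lambda city: f"Sunny in {city}"}))
host.connect(ToyServer("math", {"add": lambda a, b: a + b}))
catalog = host.discover()
print(catalog)
```

The point of the model is the shape: one host, several clients, and each client bound to a single server.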

Core components of the Model Context Protocol

- Tools

Tools let LLMs execute actions. Think of them as functional plugins.

    from fastmcp import FastMCP

    mcp = FastMCP("unit-converter")

    # Placeholder factors; replace with the real conversion constants.
    SOME_FACTOR = 1.0
    ANOTHER_FACTOR = 1.0


    @mcp.tool()
    async def conversion(input_unit: str, output_unit: str, value_to_convert: float) -> str:
        """Perform a conversion between two units.

        Args:
            input_unit: The unit of the value to be converted.
            output_unit: The desired unit after conversion.
            value_to_convert: The numerical value to convert.
        """
        if input_unit == "UNIT_A" and output_unit == "UNIT_B":
            # Perform specific conversion logic from UNIT_A to UNIT_B
            converted_value = value_to_convert * SOME_FACTOR
            result = f"{value_to_convert} {input_unit} is equal to {converted_value} {output_unit}"
        elif input_unit == "UNIT_C" and output_unit == "UNIT_D":
            # Perform specific conversion logic from UNIT_C to UNIT_D
            converted_value = value_to_convert / ANOTHER_FACTOR
            result = f"{value_to_convert} {input_unit} is equal to {converted_value} {output_unit}"
        else:
            result = f"Conversion from {input_unit} to {output_unit} is not supported."
        return result

The model can call these tools to perform real-world tasks, such as calculations or API calls.
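
On the wire, a tool invocation is a JSON-RPC 2.0 exchange. The sketch below builds a `tools/call` request and a matching result by hand, just to show the shape of the messages. The helper names `make_tool_call` and `make_tool_result` are hypothetical, the unit names are made up, and in practice an SDK produces these messages for you.

```python
import json


def make_tool_call(request_id: int, name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking a server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def make_tool_result(request_id: int, text: str) -> dict:
    """Build the matching response carrying one text content item."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }


# Illustrative arguments for a `conversion` tool; the units are invented.
request = make_tool_call(
    1,
    "conversion",
    {"input_unit": "miles", "output_unit": "parsecs", "value_to_convert": 2.0},
)
response = make_tool_result(
    request["id"], "Conversion from miles to parsecs is not supported."
)

print(json.dumps(request, indent=2))
print(json.dumps(response, indent=2))
```

The response reuses the request's `id`, which is how the client pairs an answer with the call that triggered it.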

- Resources

Resources supply contextual data—text files, PDFs, logs, or binary content—that the model can read during execution.

    # Assumes an existing FastMCP server instance named `mcp`.
    # Resource template expecting a 'user_id' from the URI
    @mcp.resource("db://users/{user_id}/email")
    async def get_user_email(user_id: str) -> str:
        """Retrieves the email address for a given user ID."""
        # Replace with actual database lookup
        emails = {"123": "alice@example.com", "456": "bob@example.com"}
        return emails.get(user_id, "not_found@example.com")
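
To see how a templated URI such as `db://users/{user_id}/email` can map onto function arguments, here is a minimal sketch using only the standard library. It illustrates the idea and is not a description of how FastMCP matches URIs internally; `template_to_regex` and `match_uri` are hypothetical helpers.

```python
import re
from typing import Optional


def template_to_regex(template: str) -> re.Pattern:
    """Turn 'db://users/{user_id}/email' into a regex with named groups."""
    parts = re.split(r"\{(\w+)\}", template)
    # Even indexes hold literal text, odd indexes hold parameter names.
    pattern = "".join(
        re.escape(part) if i % 2 == 0 else f"(?P<{part}>[^/]+)"
        for i, part in enumerate(parts)
    )
    return re.compile(f"^{pattern}$")


def match_uri(template: str, uri: str) -> Optional[dict]:
    """Return the extracted parameters, or None if the URI does not fit."""
    m = template_to_regex(template).match(uri)
    return m.groupdict() if m else None


params = match_uri("db://users/{user_id}/email", "db://users/123/email")
print(params)
print(match_uri("db://users/{user_id}/email", "db://users/123/phone"))
```

Each placeholder matches a single path segment, so a URI with extra slashes in the `user_id` position is rejected rather than silently accepted.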

- Prompts

Prompt templates enable reusable content generation strategies.

    # Assumes an existing FastMCP server instance named `mcp`.
    @mcp.prompt()
    def ask_review(code_snippet: str) -> str:
        """Generates a standard code review request."""
        return f"Please review the following code snippet for potential bugs and style issues:\n```python\n{code_snippet}\n```"

Use these to structure consistent, intelligent responses.
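
When a client requests a prompt, the server answers with a list of chat messages rather than a bare string. The sketch below wraps the text from an `ask_review`-style function in the layout that the MCP specification describes for a `prompts/get` result. `as_prompt_result` is a hypothetical helper, and frameworks such as FastMCP normally do this wrapping for you.

```python
def ask_review(code_snippet: str) -> str:
    """Simplified version of the prompt function: returns plain text."""
    return (
        "Please review the following code snippet for potential bugs "
        f"and style issues:\n{code_snippet}"
    )


def as_prompt_result(description: str, text: str) -> dict:
    """Wrap prompt text as a single user message."""
    return {
        "description": description,
        "messages": [
            {"role": "user", "content": {"type": "text", "text": text}},
        ],
    }


result = as_prompt_result(
    "Generates a standard code review request.",
    ask_review("print('hi')"),
)
print(result["messages"][0]["content"]["text"])
```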

- Roots

Roots define the operational scope—e.g., what directories or endpoints the MCP server can use.

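    import asyncio

    from fastmcp import Client
    from fastmcp.client.transports import SSETransport

    transport_explicit = SSETransport(url="http://localhost:8000/sse")


    async def client_example():
        # Connect to a running MCP server and specify the roots we want to use
        async with Client(
            transport_explicit, roots=["file:///home/user/projects/"]
        ) as client:
            # Requests made inside this block run with the roots above advertised
            tools = await client.list_tools()
            print([tool.name for tool in tools])


    asyncio.run(client_example())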

They help maintain guardrails and enforce context boundaries.
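
One way to picture the guardrail: before touching a file, a server can check that the file's URI falls under one of the advertised roots. The standard-library sketch below shows such a check. `uri_to_path` and `is_within_roots` are hypothetical helpers, the paths are illustrative, and how strictly a real server enforces roots is an assumption here, not something the original example shows.

```python
import posixpath
from pathlib import PurePosixPath
from urllib.parse import unquote, urlparse


def uri_to_path(uri: str) -> PurePosixPath:
    """Convert a file:// URI into a normalized POSIX path (no disk access)."""
    parsed = urlparse(uri)
    if parsed.scheme != "file":
        raise ValueError(f"unsupported scheme: {parsed.scheme!r}")
    # normpath collapses '..' segments so traversal cannot escape a root.
    # Symlinks are not resolved, because nothing here touches the filesystem.
    return PurePosixPath(posixpath.normpath(unquote(parsed.path)))


def is_within_roots(uri: str, roots: list[str]) -> bool:
    """True if the URI equals, or is nested under, any root URI."""
    target = uri_to_path(uri)
    for root in roots:
        root_path = uri_to_path(root)
        if target == root_path or root_path in target.parents:
            return True
    return False


roots = ["file:///home/user/projects/"]
print(is_within_roots("file:///home/user/projects/app/main.py", roots))
print(is_within_roots("file:///etc/passwd", roots))
print(is_within_roots("file:///home/user/projects/../secrets.txt", roots))
```

Normalizing before comparing matters: without it, a path containing `..` would appear to sit under the root while actually pointing outside it.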

- Sampling

Sampling allows the server to delegate text generation to the client’s LLM, while keeping business logic centralized.

    from fastmcp import Context

    # Assumes an existing FastMCP server instance named `mcp`.
    @mcp.tool()
    async def generate_content(topic: str, context: Context) -> str:
        """Generate content about the given topic."""
        # The server requests a completion from the client LLM
        response = await context.sample(
            f"Write a paragraph about {topic}",
        )
        return response.text

- Transports

Transports are the messaging layer. MCP exchanges JSON-RPC 2.0 messages over transports such as stdio and Server-Sent Events (SSE).

    from fastmcp.client.transports import (
        SSETransport,
        StdioTransport,
    )
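
To make the messaging layer concrete: over stdio, each JSON-RPC message travels as a single line of JSON terminated by a newline. The sketch below frames and parses messages that way, using an in-memory buffer in place of a real process pipe. `write_message` and `read_messages` are hypothetical helper names, not part of any SDK.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside strings, so the output stays on one line.
    stream.write(json.dumps(message) + "\n")


def read_messages(stream):
    """Yield each non-empty line of the stream as a parsed message."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


pipe = io.StringIO()
# A request carries an id; a notification does not expect a reply and has none.
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})

pipe.seek(0)
received = list(read_messages(pipe))
for message in received:
    print(message)
```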

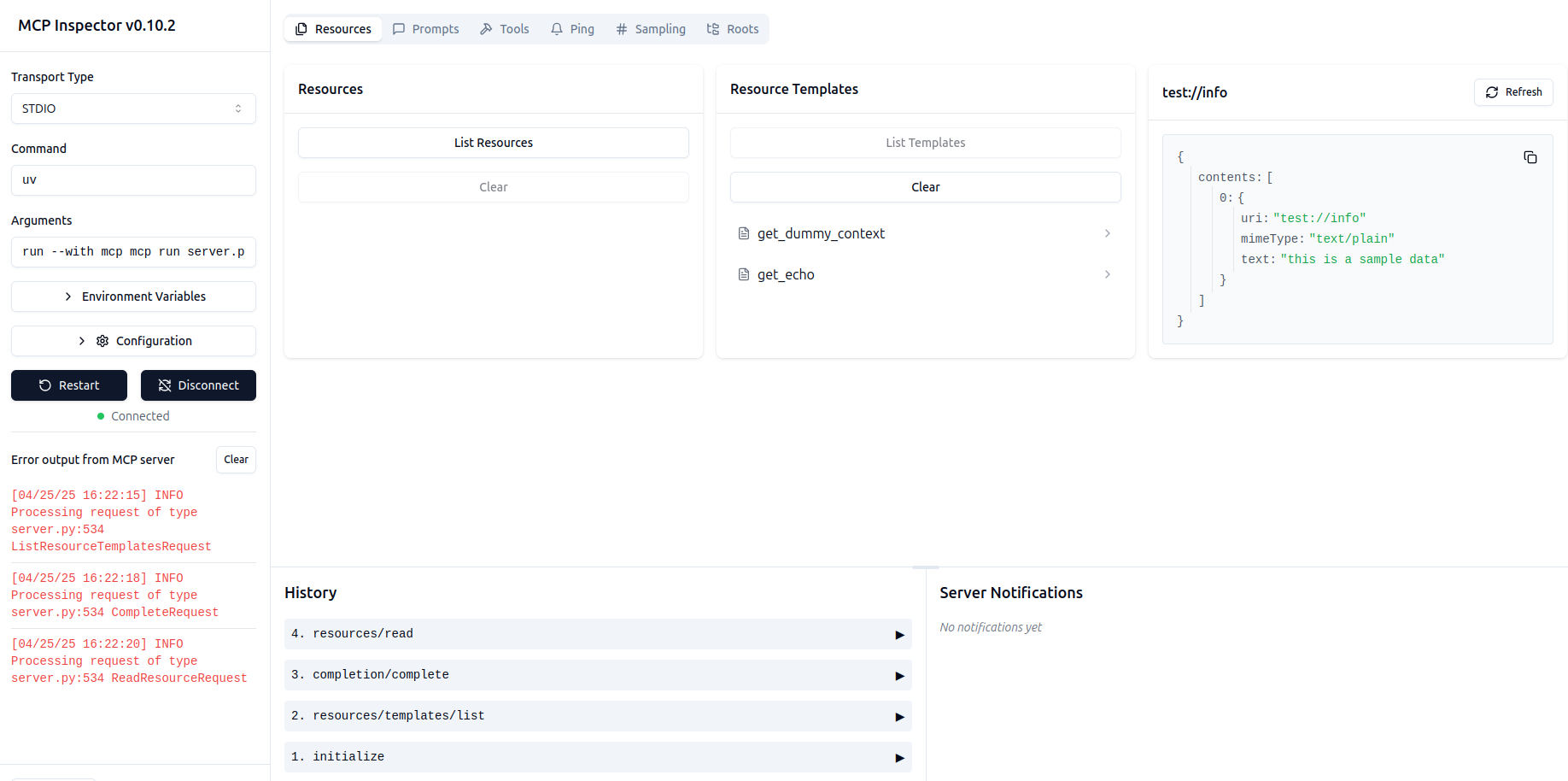

How can you inspect and test your MCP server setup?

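Once the MCP server is running, you can inspect and test it with the MCP Inspector, a browser-based tool served on localhost and typically started with `npx @modelcontextprotocol/inspector`.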

Building with MCP: FastMCP SDK

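The easiest way to get started in Python is with FastMCP, a framework whose first version was folded into the official MCP Python SDK and which continues as a standalone project (the `fastmcp` package used in the examples here).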

It helps you:

- Build an MCP client or server

- Register tools, resources, and prompts

- Handle protocol communications without boilerplate

FastMCP supports stdio and Server-Sent Events (SSE) out of the box, and it's actively maintained.

Explore the MCP documentation for examples and advanced setups.

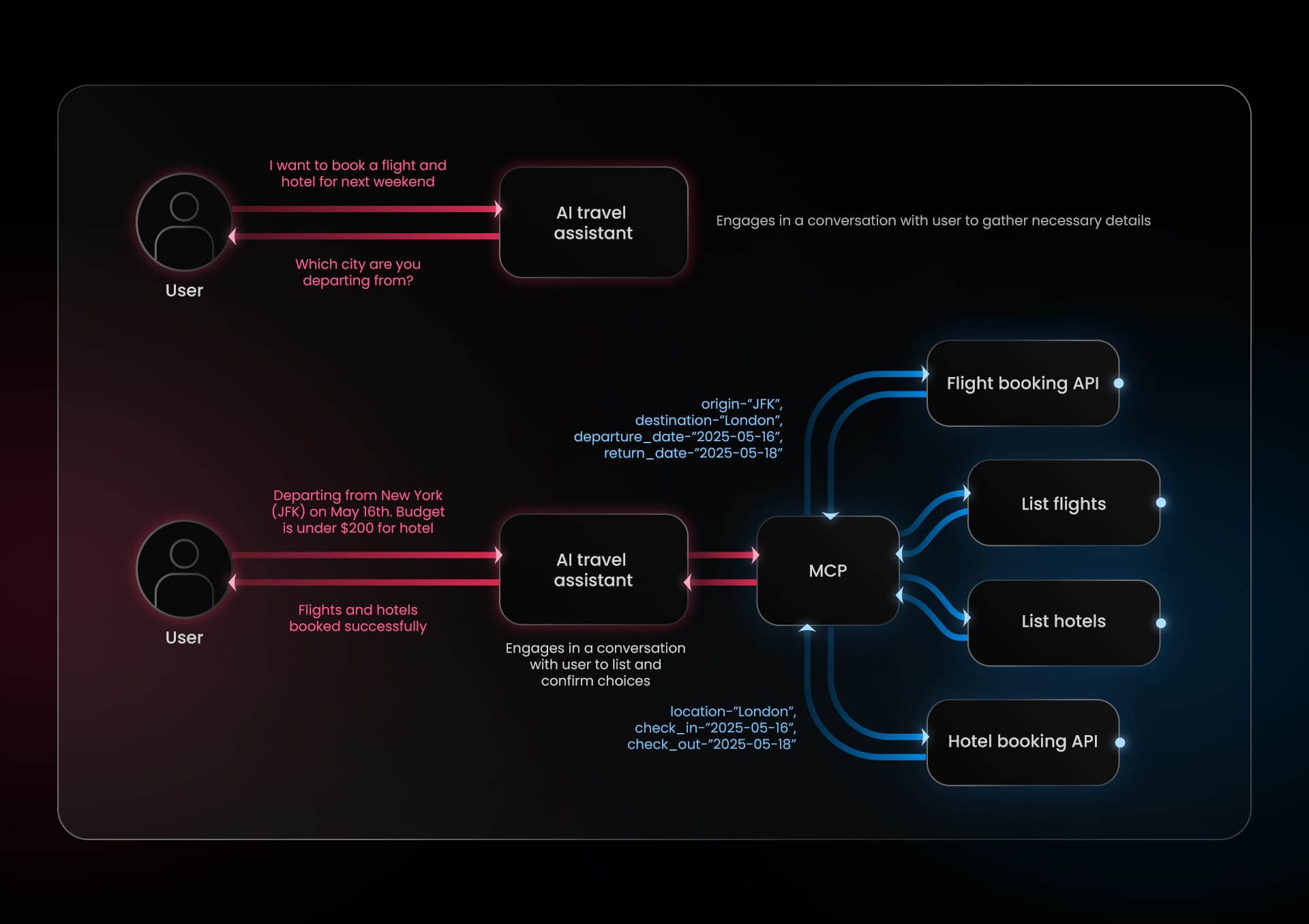

Real-world example: Online travel agency

Let’s look at one of the more compelling MCP use cases in action.

The problem

A mid-sized travel agency wanted to improve its AI assistant. Customers asked questions like:

- “Is there a hotel available in Tokyo tonight?”

- “What’s the weather like in Paris this weekend?”

Their existing system couldn’t respond accurately—it lacked live data access.

The solution

Using MCP, the agency created a setup where the AI could:

- Autonomously discover tools and APIs

- Evaluate tool relevance for each query

- Pull real-time weather, flight, and accommodation data
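
The three steps above (discover, evaluate, call) can be sketched without any MCP machinery. Everything in this example is hypothetical: the tool names, the keyword-overlap scoring, and the canned return values stand in for real MCP servers and for the LLM's own judgment about which tool fits a query.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    keywords: str  # space-separated words describing what the tool covers
    run: Callable[[str], str]


# Step 1: "discovery" is reading a catalog, as a client would after listing tools.
CATALOG = [
    Tool("get_weather", "weather forecast temperature rain",
         lambda city: f"(stub) forecast for {city}"),
    Tool("find_hotels", "hotel room availability booking",
         lambda city: f"(stub) hotels in {city}"),
]


def pick_tool(query: str, catalog: list[Tool]) -> Tool:
    """Step 2: score each tool by the words it shares with the query."""
    words = set(query.lower().replace("?", "").split())
    return max(catalog, key=lambda t: len(words & set(t.keywords.split())))


# Step 3: call the chosen tool.
hotel_tool = pick_tool("Is there a hotel available in Tokyo tonight?", CATALOG)
weather_tool = pick_tool("What's the weather like in Paris this weekend?", CATALOG)
print(hotel_tool.name, "->", hotel_tool.run("Tokyo"))
print(weather_tool.name, "->", weather_tool.run("Paris"))
```

In a real deployment the model reads each tool's description and schema and decides for itself; the keyword overlap here only makes the selection step visible.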

The impact

The assistant became a context-aware decision-maker. Customers got relevant answers in seconds—without human intervention. The company saw improved satisfaction and a drop in support tickets.

This is the power of MCP for LLM integrations done right.

The road ahead

The ModelContextProtocol GitHub repo is where development is actively happening. Expect ongoing contributions in:

- Tool and platform integrations

- Security enhancements

- Developer experience improvements

Whether you're just exploring or ready to build an MCP server for production, now is a great time to get involved.

Final thoughts

MCP isn’t just another API—it’s an operating layer for intelligent agents. If you're serious about building LLM applications that are flexible, scalable, and smart, mastering the model context protocol will give you a massive edge.

Start small. Build. Contribute. And let your AI agents do more—with less.

At KeyValue, we’re already exploring ways to integrate MCP across real-world use cases—helping our partners build smarter, more adaptive AI systems.

Frequently Asked Questions

1. What is MCP vs API?

MCP (Model Context Protocol) standardizes how AI models interact with tools and external data, while an API is a general method for exchanging data between systems. Unlike APIs that need custom integrations for each use case, MCP provides a single, unified interface that allows models to securely discover and use tools in real time.

2. What is the use of MCP?

MCP enables large language models (LLMs) to access external context, data, and actions dynamically. It’s used to build smarter AI systems—like assistants, dashboards, and automation agents—that can fetch real-time data and perform tasks beyond their static training.

3. What is Model Context Protocol in Kubernetes?

In Kubernetes environments, MCP can act as a bridge for AI systems to interact with live infrastructure data or tools running in clusters. Developers can deploy MCP servers alongside microservices to let AI agents query metrics, logs, or configurations securely and efficiently.

4. What is the difference between MCP and LLM?

MCP is a protocol, while an LLM is a model. The LLM generates responses using language understanding, whereas MCP defines how that model connects to tools and data sources. Together, they create context-aware AI systems capable of reasoning and acting with live information.

5. Which LLM supports MCP?

MCP support comes from the client application as much as from the model. Anthropic’s Claude apps, such as Claude Desktop, support MCP natively, allowing Claude models to connect to external tools and servers through the protocol. As the ecosystem grows, more LLM frameworks are expected to adopt MCP for real-time context access.